@ahopkins

I am not sure if I can help with a reproducible code as this looks like a race condition between monitor and shutdown operations.

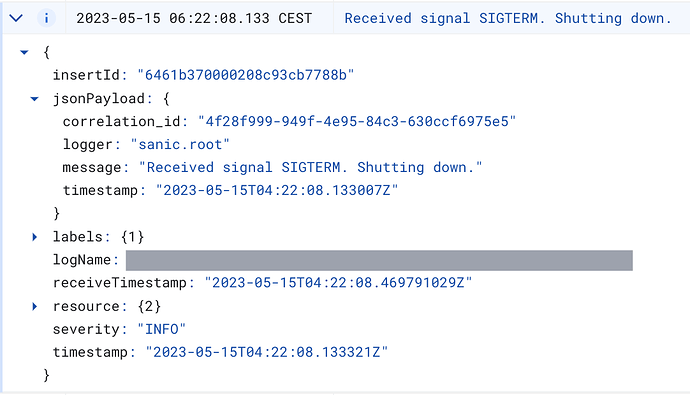

Server Logs:

timestamp,severity,textPayload

2023-04-01T14:37:05.977581Z,INFO,[pid1-app] Exit 0 app (pid 11): /bin/sh -c exec sanic main:app --port=${PORT:-8080} --no-motd --no-access-logs --workers 4 [session:HKBFS3X]

2023-04-01T14:37:03.488038Z,,[INFO] [24] [Sanic-Server-2-0] sanic.server: Stopping worker [24]

2023-04-01T14:37:03.488028Z,,[INFO] [23] [Sanic-Server-1-0] sanic.server: Stopping worker [23]

2023-04-01T14:37:03.488017Z,,[INFO] [25] [Sanic-Server-3-0] sanic.server: Stopping worker [25]

2023-04-01T14:37:03.487999Z,,[INFO] [22] [Sanic-Server-0-0] sanic.server: Stopping worker [22]

2023-04-01T14:37:03.479585Z,,[INFO] [11] [MainProcess] sanic.root: Server Stopped

2023-04-01T14:37:03.479572Z,,KeyError: 'Sanic-Server-3-0'

2023-04-01T14:37:03.479337Z,," raise convert_to_error(kind, result)"

2023-04-01T14:37:03.479312Z,," File ""/layers/google.python.runtime/python/lib/python3.11/multiprocessing/managers.py"", line 837, in _callmethod"

2023-04-01T14:37:03.479266Z,," File ""<string>"", line 2, in __getitem__"

2023-04-01T14:37:03.479250Z,," ~~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^"

"Traceback (most recent call last):,ERROR

in _sync_states

2023-04-01T14:37:03.479238Z

2023-04-01T14:37:03.479213Z,,[ERROR] [11] [MainProcess] sanic.root: KeyError occurred while synchronizing worker states. err='Sanic-Server-3-0'

2023-04-01T14:37:03.479198Z,,KeyError: 'Sanic-Server-2-0'

2023-04-01T14:37:03.478801Z,," raise convert_to_error(kind, result)"

2023-04-01T14:37:03.478790Z,," File ""/layers/google.python.runtime/python/lib/python3.11/multiprocessing/managers.py"", line 837, in _callmethod"

2023-04-01T14:37:03.478777Z,," File ""<string>"", line 2, in __getitem__"

2023-04-01T14:37:03.478764Z,," ~~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^"

"Traceback (most recent call last):,ERROR

in _sync_states

2023-04-01T14:37:03.478754Z

2023-04-01T14:37:03.478730Z,,[ERROR] [11] [MainProcess] sanic.root: KeyError occurred while synchronizing worker states. err='Sanic-Server-2-0'

2023-04-01T14:37:03.478716Z,,KeyError: 'Sanic-Server-1-0'

2023-04-01T14:37:03.478074Z,," raise convert_to_error(kind, result)"

2023-04-01T14:37:03.478062Z,," File ""/layers/google.python.runtime/python/lib/python3.11/multiprocessing/managers.py"", line 837, in _callmethod"

2023-04-01T14:37:03.478049Z,," File ""<string>"", line 2, in __getitem__"

2023-04-01T14:37:03.478031Z,," ~~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^"

"Traceback (most recent call last):,ERROR

in _sync_states

2023-04-01T14:37:03.478022Z

2023-04-01T14:37:03.477998Z,,[ERROR] [11] [MainProcess] sanic.root: KeyError occurred while synchronizing worker states. err='Sanic-Server-1-0'

2023-04-01T14:37:03.477982Z,,KeyError: 'Sanic-Server-0-0'

2023-04-01T14:37:03.477269Z,," raise convert_to_error(kind, result)"

2023-04-01T14:37:03.477256Z,," File ""/layers/google.python.runtime/python/lib/python3.11/multiprocessing/managers.py"", line 837, in _callmethod"

2023-04-01T14:37:03.477240Z,," File ""<string>"", line 2, in __getitem__"

2023-04-01T14:37:03.477218Z,," ~~~~~~~~~~~~~~~~~^^^^^^^^^^^^^^"

"Traceback (most recent call last):,ERROR

in _sync_states

2023-04-01T14:37:03.477207Z

2023-04-01T14:37:03.477174Z,,[ERROR] [11] [MainProcess] sanic.root: KeyError occurred while synchronizing worker states. err='Sanic-Server-0-0'

2023-04-01T14:37:03.468257Z,INFO,[pid1-nginx] Exit 0 nginx (pid 18): /usr/sbin/nginx -c /tmp/nginxconf-2913916072/nginx.conf [session:HKBFS3X]

2023-04-01T14:37:03.467932Z,,[INFO] [11] [MainProcess] sanic.root: Terminating a process: Sanic-Server-3-0 [25]

2023-04-01T14:37:03.466299Z,,[INFO] [11] [MainProcess] sanic.root: Terminating a process: Sanic-Server-2-0 [24]

2023-04-01T14:37:03.464831Z,,[INFO] [11] [MainProcess] sanic.root: Terminating a process: Sanic-Server-1-0 [23]

2023-04-01T14:37:03.461914Z,,[INFO] [11] [MainProcess] sanic.root: Terminating a process: Sanic-Server-0-0 [22]

2023-04-01T14:37:03.461724Z,,[INFO] [11] [MainProcess] sanic.root: Received signal SIGTERM. Shutting down.

2023-04-01T14:37:03.457982Z,INFO,[pid1-app] Propagating terminated signal to app (pid 11) [session:HKBFS3X]

2023-04-01T14:37:03.457973Z,INFO,[pid1-nginx] Propagating terminated signal to nginx (pid 18) [session:HKBFS3X]

2023-04-01T14:37:03.457963Z,INFO,[pid1] Waiting 5s to allow shutdown processing [session:HKBFS3X]

2023-04-01T14:37:03.457949Z,INFO,[pid1] Sending terminated signal to processes [session:HKBFS3X]

2023-04-01T14:37:03.457025Z,INFO,[pid1] Received terminated signal [session:HKBFS3X]

2023-04-01T14:36:04.183927Z,,[INFO] [24] [Sanic-Server-2-0] GAE-APP: initializing app configs

2023-04-01T14:35:58.441111Z,,[INFO] [23] [Sanic-Server-1-0] GAE-APP: initializing app configs

2023-04-01T14:35:57.999842Z,,[INFO] [22] [Sanic-Server-0-0] GAE-APP: initializing app configs

2023-04-01T14:35:56.962331Z,,[INFO] [25] [Sanic-Server-3-0] GAE-APP: initializing app configs

2023-04-01T14:35:56.931374Z,,[INFO] [22] [Sanic-Server-0-0] sanic.server: Starting worker [22]

2023-04-01T14:35:56.924851Z,,[INFO] [23] [Sanic-Server-1-0] sanic.server: Starting worker [23]

2023-04-01T14:35:56.923972Z,,[INFO] [25] [Sanic-Server-3-0] sanic.server: Starting worker [25]

2023-04-01T14:35:56.923941Z,,[INFO] [24] [Sanic-Server-2-0] sanic.server: Starting worker [24]

2023-04-01T14:35:55.382907Z,INFO,[pid1-nginx] Starting nginx (pid 18): /usr/sbin/nginx -c /tmp/nginxconf-2913916072/nginx.conf [session:HKBFS3X]

2023-04-01T14:35:55.375349Z,INFO,[pid1-nginx] Successfully connected to localhost:8081 after 1.268327928s [session:HKBFS3X]

2023-04-01T14:35:54.110717Z,INFO,[pid1-app] Starting app (pid 11): /bin/sh -c exec sanic main:app --port=${PORT:-8080} --no-motd --no-access-logs --workers 4 [session:HKBFS3X]

2023-04-01T14:35:54.106710Z,INFO,[pid1-nginx] nginx waiting for any of 3 prerequisite(s): [portbind:tcp:localhost:8080 portbind:tcp:localhost:8081 portbind:unix:/tmp/google-config/app.sock] [session:HKBFS3X]

2023-04-01T14:35:54.106298Z,INFO,"[pid1-app] app has no prerequisites, starting immediately [session:HKBFS3X]"

2023-04-01T14:35:54.106279Z,INFO,[pid1] Starting processes [app nginx] [session:HKBFS3X]

2023-04-01T14:35:54.105804Z,DEBUG,"[pid1-app] Using app start info from /srv/.googleconfig/app_start.json: &main.appStart{Entrypoint:struct { Type string ""json:\""type\""""; UnparsedValue string ""json:\""unparsed_value\""""; Command string ""json:\""command\""""; WorkDir string ""json:\""workdir\"""" }{Type:""User"", UnparsedValue:"""", Command:""sanic main:app --port=${PORT:-8080} --no-motd --no-access-logs --workers 4"", WorkDir:""""}, EntrypointFromAppYAML:"""", EntrypointContents:"""", Runtime:""python311""} [session:HKBFS3X]"

2023-04-01T14:35:54.100296Z,INFO,[pid1] started [session:HKBFS3X]

Looking at the implementation of WorkerManager and WorkerProcess implementation, it seems like they share the same worker_state object. So, it’s obvious to see KeyError if you are calling WorkerProcess#set_state right after seeing KeyError at WorkerManager#_sync_states. I think we should do something like this to handle this gracefully.

--- a/sanic/worker/process.py

+++ b/sanic/worker/process.py

@@ -37,10 +37,11 @@ class WorkerProcess:

if not force and state < self.state:

raise Exception("...")

self.state = state

- self.worker_state[self.name] = {

- **self.worker_state[self.name],

- "state": self.state.name,

- }

+ if self.name in self.worker_state:

+ self.worker_state[self.name] = {

+ **self.worker_state[self.name],

+ "state": self.state.name,

+ }

Alternatively, the sync task can be skipped if the server is shutting down. I don’t know if it has any adverse effects.

--- a/sanic/worker/manager.py

+++ b/sanic/worker/manager.py

@@ -293,10 +293,10 @@ class WorkerManager:

self.shutdown()

def shutdown(self):

+ self._shutting_down = True

for process in self.processes:

if process.is_alive():

process.terminate()

- self._shutting_down = True

@property

def pid(self):

@@ -311,11 +311,8 @@ class WorkerManager:

return all(acked) and len(acked) == self.num_server

def _sync_states(self):

- for process in self.processes:

- try:

+ if not self._shutting_down:

+ for process in self.processes:

state = self.worker_state[process.name].get("state")

- except KeyError:

- process.set_state(ProcessState.TERMINATED, True)

- continue

- if state and process.state.name != state:

- process.set_state(ProcessState[state], True)

+ if state and process.state.name != state:

+ process.set_state(ProcessState[state], True)