This sounds like an architectural problem. One solution is a pattern where your workers talk to each other, so that each one knows when the message has been received by all the others. Last message in wins.

Do you need to wait until all workers receive the message? Personally, I'd push the work to a shared queue: a worker moves the job from the queue to an in-progress state, then acks it when done. Only one worker should be able to move a given job out of the queue, so if another worker tries, it will fail.
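To make the queue idea concrete, here is a minimal in-memory sketch of the claim/ack flow. `WorkQueue`, `claim`, `ack`, and `run_workers` are names I made up for illustration, and a `threading.Lock` stands in for whatever atomic operation your real shared store provides (with Redis, that would probably be an atomic list move such as `LMOVE`, but that part is an assumption and not shown here).

```python
import threading
from collections import deque


class WorkQueue:
    """In-memory stand-in for a shared queue (hypothetical, not a Sanic or Redis API)."""

    def __init__(self):
        self._lock = threading.Lock()
        self._pending = deque()   # jobs waiting to be claimed
        self._in_progress = {}    # job_id -> (worker_id, payload)
        self._next_id = 0

    def push(self, payload):
        with self._lock:
            job_id = self._next_id
            self._next_id += 1
            self._pending.append((job_id, payload))
            return job_id

    def claim(self, worker_id):
        """Atomically move one job from pending to in-progress; None if nothing is left."""
        with self._lock:
            if not self._pending:
                return None
            job_id, payload = self._pending.popleft()
            self._in_progress[job_id] = (worker_id, payload)
            return job_id, payload

    def ack(self, job_id):
        """Mark a job as done; returns False if it was not in progress."""
        with self._lock:
            return self._in_progress.pop(job_id, None) is not None


def run_workers(queue, num_workers):
    """Run worker threads until the queue is drained; return (worker_id, job_id) pairs."""
    processed = []
    processed_lock = threading.Lock()

    def worker(worker_id):
        while (job := queue.claim(worker_id)) is not None:
            job_id, payload = job
            # ... the real work on `payload` would happen here ...
            queue.ack(job_id)
            with processed_lock:
                processed.append((worker_id, job_id))

    threads = [threading.Thread(target=worker, args=(i,)) for i in range(num_workers)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return processed
```

Because the move from pending to in-progress happens under a single lock, each job is handed to exactly one worker. Anything left in the in-progress set without an ack is a job whose worker probably died, so you can requeue it.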

Another option is to build a load balancer: one dedicated worker that pulls from the queue and pushes each job to a specific worker to perform the operation. I guess the question is how complex you need the work to be.
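A rough sketch of that dispatcher idea, using plain `asyncio` queues in a single process. The function names and the round-robin choice are my assumptions; in a real multi-process setup the per-worker inboxes would need to be something that crosses process boundaries. `None` is used as a shutdown sentinel, so jobs themselves must not be `None`.

```python
import asyncio


async def dispatcher(source, inboxes):
    """Pull jobs from the shared queue and hand each one to exactly one worker (round robin)."""
    i = 0
    while True:
        job = await source.get()
        if job is None:  # sentinel: tell every worker to stop, then exit
            for inbox in inboxes:
                await inbox.put(None)
            return
        await inboxes[i % len(inboxes)].put(job)
        i += 1


async def worker(name, inbox, results):
    """Process jobs from this worker's own inbox until the sentinel arrives."""
    while True:
        job = await inbox.get()
        if job is None:
            return
        await asyncio.sleep(0)  # placeholder for the real work
        results.append((name, job))


async def run(jobs, num_workers=3):
    source = asyncio.Queue()
    inboxes = [asyncio.Queue() for _ in range(num_workers)]
    results = []
    for job in jobs:
        source.put_nowait(job)
    source.put_nowait(None)  # no more jobs after this
    await asyncio.gather(
        dispatcher(source, inboxes),
        *(worker(f"worker-{n}", inbox, results) for n, inbox in enumerate(inboxes)),
    )
    return results
```

Calling `asyncio.run(run(list(range(9))))` spreads the nine jobs across three workers. Only the dispatcher ever reads the shared queue, so the workers never compete for the same job.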

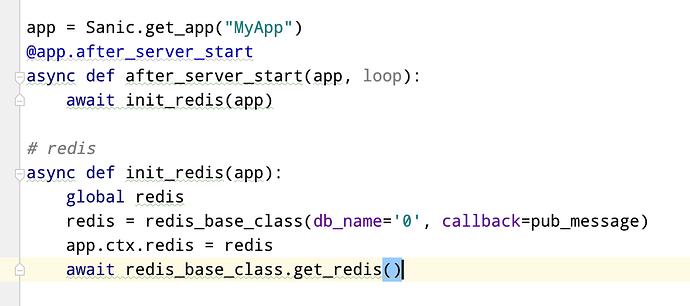

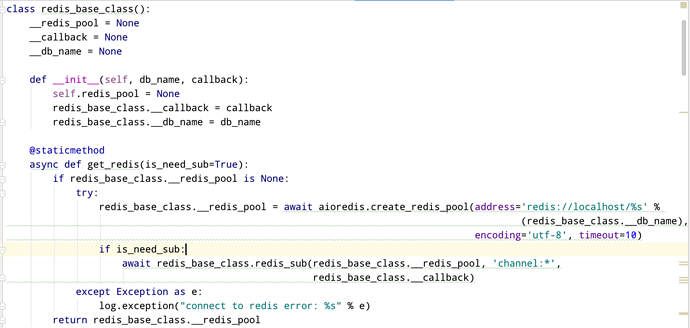

If you intend to scale only with multiple workers in one instance (and not multiple containers), you could look at the solution here: Pushing work to the background of your Sanic app

Or, even if you need a larger scale, the pattern in that article could be adapted to use Redis for distribution with the same goal: a work queue and not just messages.